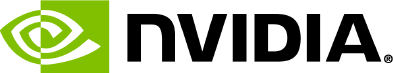

viisights’ innovative behavioral recognition video understanding technology is based on a unique implementation of deep neural networks. These AI-driven networks are capable of analyzing video content and deducing high-level concepts from it.

viisights is developing unique video understanding technology that can understand video content in much the same way humans do. The technology uses state-of-the-art computer vision artificial intelligence with a unique architecture that detects objects and their attributes and, on top of that, focuses on detecting their behavior in non-sterile environments such as a city street. This architecture can detect dynamic scenarios such as events (e.g., people fighting), actions (e.g., a person holding a rifle in a threatening position) and scenes (e.g., a brawl in a crowd).

On top of that technology, viisights is building products for the video security and safety markets. These products offer significantly more relevant, accurate and advanced features than common analytics systems, which are mostly based on static classification of objects. This approach manifests itself in viisights’ capability to detect not only how many people are in an area but also what those people are doing and how they interact with each other.

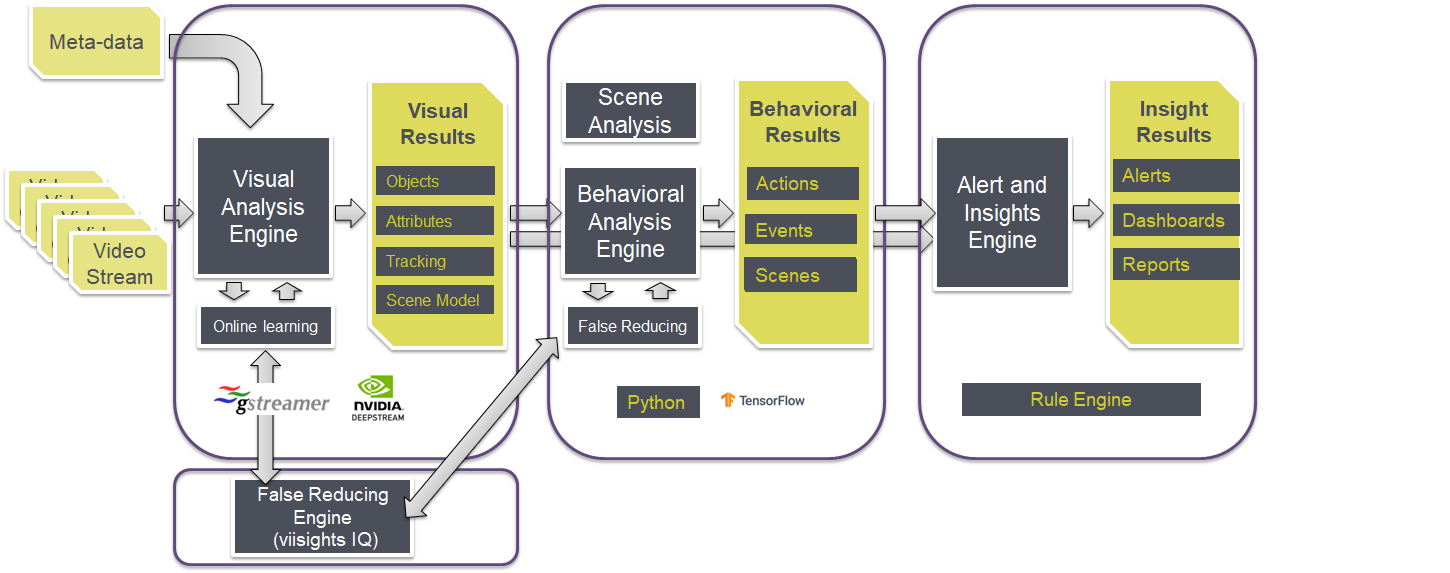

viisights technology is based on a proprietary machine learning algorithm trained on both images and video clips. The trained inference engine uses temporal analysis and a holistic view of complex scenes in which multiple objects interact with one another. All of this is done in real time and highly efficiently, resulting in a low hardware footprint and a low cost per live stream.
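To illustrate what temporal analysis adds over frame-by-frame classification, here is a minimal Python sketch. It is not viisights’ proprietary algorithm. It assumes a hypothetical upstream model that emits one event score per frame, and the function name `temporal_event_flags`, the window size and the threshold are all illustrative choices. The sketch flags an event only when the average score over a sliding window of recent frames is high, so an isolated one-frame spike is ignored while sustained activity is detected.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def temporal_event_flags(
    frame_scores: Iterable[float],
    window: int = 5,
    threshold: float = 0.6,
) -> Iterator[bool]:
    """Yield one flag per frame: True when the mean score over the
    most recent `window` frames reaches `threshold`.

    Hypothetical helper for illustration only.
    """
    recent: Deque[float] = deque(maxlen=window)
    for score in frame_scores:
        recent.append(score)
        # Decide only once the window is full, so a single early
        # spike cannot trigger an event on its own.
        yield len(recent) == window and sum(recent) / window >= threshold


# Made-up per-frame "fight" scores: one isolated spike (frame 1),
# followed by a sustained run of high scores (frames 5 to 9).
scores = [0.1, 0.9, 0.1, 0.1, 0.1, 0.8, 0.9, 0.9, 0.8, 0.9]

# Static, per-frame thresholding reacts to the isolated spike.
per_frame = [s >= 0.7 for s in scores]

# Windowed thresholding reacts only to the sustained run.
flags = list(temporal_event_flags(scores, window=3, threshold=0.7))
```

In this toy input, per-frame thresholding fires on the lone spike at frame 1, whereas the windowed version stays silent until three consecutive high-scoring frames have been seen. A production system would presumably learn temporal patterns inside the network rather than average scores, so this only conveys the intuition.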

The integration of multiple deep neural networks and the holistic analysis of multiple dimensions of video understanding create a significant challenge in terms of processing throughput and scalability.

viisights overcomes these challenges with a two-fold approach:

- Using NVIDIA GPU processors, which provide the processing power the system demands.
- Incorporating a unique system architecture that significantly shortens the processing time of each analysis aspect, allowing the system to complete the holistic analysis in near real time.